Does SAP HANA Complement or Compete with the Hadoop Stack?

Since HANA is SAP's answer to Big Data, it is interesting to see how HANA fits into the Big Data paradigm. In particular, we will look at the Hadoop stack of technologies in the context of Big Data.

Welcome to the world of unstructured data. Hadoop is ideal for processing large data sets in batch, where response time is not a concern. No schema is required up front, since we are dealing with unstructured data, and Hadoop is not optimized for updates or inserts. HDFS follows a write-once, read-many principle: files cannot be modified in place, data can only be appended, and access is optimized for large sequential reads rather than random reads.

HANA, by contrast, supports both row and column storage, and we have to define a schema before storing data. Because HANA is an in-memory appliance, it supports fast reads and on-the-fly aggregations and joins.

Having looked at the merits of both, we can see that they come from different worlds: HANA from the world of structured data with a fixed schema, optimized for real-time access, and Hadoop from the world of unstructured data, aimed at batch processing. There is some overlap between them, but it is minimal.

If we collect massive volumes of unstructured data in Hadoop, MapReduce jobs can output preliminary, formatted data sets. These data sets can then be loaded into HANA for further analysis, which gives us faster access and lets us use BusinessObjects (BO) tools for better visualizations. In this way we get the best of both worlds.
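To make the MapReduce step concrete, here is a minimal, single-process Python sketch of the map, shuffle and reduce phases. It does not use Hadoop's API; the log-line format (`product=<id> qty=<n>`) and the function names are hypothetical. The point is only that unstructured lines go in and a small, schema-shaped set of rows comes out, ready to be loaded into a HANA table.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(lines: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Emit (product, quantity) pairs from raw log lines, skipping lines that don't parse."""
    for line in lines:
        # Hypothetical format: "<timestamp> product=<id> qty=<n>"
        fields = dict(part.split("=", 1) for part in line.split() if "=" in part)
        qty = fields.get("qty", "")
        if "product" in fields and qty.isdigit():
            yield fields["product"], int(qty)


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group values by key, as the framework does between the map and reduce phases."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: Dict[str, List[int]]) -> List[Tuple[str, int]]:
    """Sum each group into one formatted (product, total_qty) row."""
    return sorted((key, sum(values)) for key, values in groups.items())


raw_logs = [
    "2015-06-01T10:00 product=A qty=3",
    "2015-06-01T10:05 product=B qty=1",
    "corrupted line with no usable fields",
    "2015-06-01T10:07 product=A qty=2",
]

rows = reduce_phase(shuffle_phase(map_phase(raw_logs)))
print(rows)  # [('A', 5), ('B', 1)]
```

On a real cluster each phase runs distributed across many nodes; the resulting rows are the "preliminary formatted data set" that would then be transferred into HANA.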

Hadoop can process massive data sets with complex algorithms, producing a first level of processed data. Business analysts can then use this data in HANA to draw further conclusions with much better response times.

If a piece of data is accessed repeatedly, it is better to materialize it in HANA for better response times.
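The trade-off behind materializing can be illustrated with a small Python analogy (this is not HANA code): an aggregate that is recomputed on every access scans the base data each time, whereas a materialized result is computed once and reused until it is refreshed. The class and method names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple


class SalesData:
    """Toy stand-in for a base table of (region, amount) rows."""

    def __init__(self, rows: List[Tuple[str, int]]) -> None:
        self.rows = rows
        self.scans = 0  # how many times the base rows were fully read
        self._materialized: Optional[Dict[str, int]] = None

    def totals_on_the_fly(self) -> Dict[str, int]:
        """Aggregate by scanning all base rows on every call."""
        self.scans += 1
        totals: Dict[str, int] = {}
        for region, amount in self.rows:
            totals[region] = totals.get(region, 0) + amount
        return totals

    def totals_materialized(self) -> Dict[str, int]:
        """Return the stored result, computing it only on first use."""
        if self._materialized is None:
            self._materialized = self.totals_on_the_fly()
        return self._materialized

    def refresh(self) -> None:
        """Drop the stored result so the next read recomputes it (e.g. after new data arrives)."""
        self._materialized = None


data = SalesData([("EU", 10), ("US", 5), ("EU", 3)])
for _ in range(3):
    data.totals_on_the_fly()
print(data.scans)  # 3

for _ in range(3):
    data.totals_materialized()
print(data.scans)  # 4: only the first materialized read scanned the rows
```

The cost of materializing is staleness: the stored result must be refreshed whenever the underlying data changes, so it pays off mainly for data that is read often and changes rarely.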

- Sqoop ("SQL-to-Hadoop") is an ETL-style tool for moving data between a Hadoop cluster and relational databases in either direction. It can read data from HDFS files, Hive tables and Avro files and insert it into HANA tables. So if we have structured data residing in the Hadoop cluster, Sqoop is ideal.

- Sqoop has a lightweight command-line interface and runs transfers as parallel MapReduce jobs. However, we need to choose a numeric column on which Sqoop can divide the data into disjoint sets: Sqoop calculates the MIN and MAX values of that column and distributes the load across the parallel jobs accordingly. Sqoop also supports incremental loading based on a column.

- We can also specify a WHERE clause with conditions to restrict the data being transferred. However, column names must be mapped and data types must be compatible between the Hadoop cluster and the HANA tables. Although Sqoop can perform certain transformations, they are basic and limited. For extensive transformations, we can add a Pig or Hive layer and then use Sqoop to transport the result into HANA, although this adds one more hop to the data footprint.

- We can also use SAP BusinessObjects Data Services (BODS) to provision data from HDFS/Hive into HANA, but Sqoop is easier to use and configure, and it is also fast.

- Flume ingests streaming data sources into Hadoop, playing a role similar to Sybase Event Stream Processor.
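The two Sqoop ideas described above, splitting a transfer on a numeric column using its MIN and MAX values and loading incrementally from a check column, can be sketched in Python as follows. This is a simplified illustration, not Sqoop's actual splitter; in Sqoop itself these behaviours are driven by import options such as `--split-by`, `--num-mappers`, `--incremental append`, `--check-column` and `--last-value`. The function names and sample rows are hypothetical.

```python
from typing import Dict, List, Tuple


def split_where_clauses(column: str, lo: int, hi: int, num_mappers: int) -> List[str]:
    """Divide the integer range [lo, hi] into disjoint, non-empty WHERE clauses, one per mapper."""
    if num_mappers < 1 or hi < lo:
        raise ValueError("need num_mappers >= 1 and hi >= lo")
    count = hi - lo + 1          # number of distinct integer values in the range
    n = min(num_mappers, count)  # never create more splits than values
    bounds = [lo + (count * i) // n for i in range(n + 1)]  # bounds[-1] == hi + 1
    return [f"{column} >= {start} AND {column} < {end}" for start, end in zip(bounds, bounds[1:])]


def incremental_rows(
    rows: List[Dict[str, int]], check_column: str, last_value: int
) -> Tuple[List[Dict[str, int]], int]:
    """Return only rows newer than last_value, plus the new high-water mark for the next run."""
    new_rows = [row for row in rows if row[check_column] > last_value]
    new_last = max((row[check_column] for row in new_rows), default=last_value)
    return new_rows, new_last


# Hypothetical source table keyed by "id"
table = [{"id": i, "qty": i % 7} for i in range(1, 101)]

# Step 1: the equivalent of SELECT MIN(id), MAX(id)
lo = min(row["id"] for row in table)
hi = max(row["id"] for row in table)

for clause in split_where_clauses("id", lo, hi, 4):
    print(clause)
# id >= 1 AND id < 26
# id >= 26 AND id < 51
# id >= 51 AND id < 76
# id >= 76 AND id < 101

# Step 2: a later run picks up only the rows added since the stored last value
table.extend({"id": i, "qty": 0} for i in range(101, 104))
new_rows, last_value = incremental_rows(table, "id", last_value=100)
print(len(new_rows), last_value)  # 3 103
```

Because the ranges are equal in width rather than in row count, the split column should be evenly distributed; a skewed column gives some parallel jobs far more rows than others.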

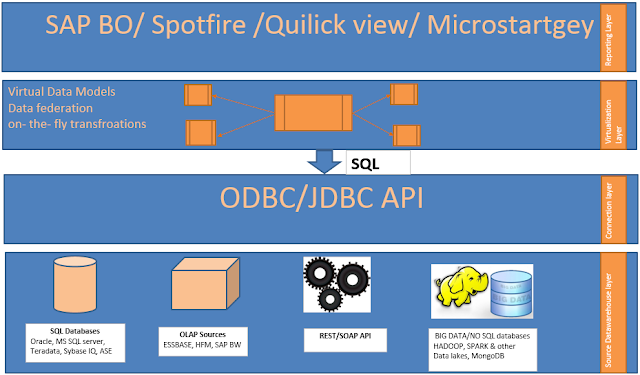

- SDA (Smart Data Access) uses the corresponding drivers to access remote data sources and pushes processing down to those sources.

- HANA SPS10 brings many updates for accessing HDFS, such as Spark SQL, which is far better and faster than Hive for SQL queries. I have yet to look at it.
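Why pushing processing down to the remote source matters can be shown with a small Python model. `RemoteSource` is a hypothetical stand-in for a remote system reached through a driver (it is not the SDA API); the sketch only counts how many rows cross the connection when filtering and aggregation run locally versus remotely.

```python
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


def aggregate(rows: List[Row], predicate: Callable[[Row], bool], key: str, value: str) -> Dict[str, int]:
    """Keep rows matching predicate, then sum `value` per `key`."""
    totals: Dict[str, int] = {}
    for row in rows:
        if predicate(row):
            totals[row[key]] = totals.get(row[key], 0) + row[value]
    return totals


class RemoteSource:
    """Hypothetical remote table that counts rows sent back over the connection."""

    def __init__(self, rows: List[Row]) -> None:
        self._rows = rows
        self.rows_transferred = 0

    def fetch_all(self) -> List[Row]:
        """No pushdown: ship every row to the caller."""
        self.rows_transferred += len(self._rows)
        return list(self._rows)

    def query_pushed_down(self, predicate: Callable[[Row], bool], key: str, value: str) -> Dict[str, int]:
        """Pushdown: filter and aggregate remotely, ship only the result rows."""
        result = aggregate(self._rows, predicate, key, value)
        self.rows_transferred += len(result)
        return result


def is_2015(row: Row) -> bool:
    return row["year"] == 2015


sales = [
    {"region": "EU", "year": 2015, "amount": 10},
    {"region": "US", "year": 2015, "amount": 5},
    {"region": "EU", "year": 2014, "amount": 7},
    {"region": "EU", "year": 2015, "amount": 3},
]

local = RemoteSource(sales)
local_result = aggregate(local.fetch_all(), is_2015, "region", "amount")

remote = RemoteSource(sales)
remote_result = remote.query_pushed_down(is_2015, "region", "amount")

print(local_result == remote_result)                    # True
print(local.rows_transferred, remote.rows_transferred)  # 4 2
```

With four rows the difference is trivial, but against a Hadoop-sized table the pushed-down version moves only the aggregated result instead of the raw data.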
