Virtualization with SAP HANA

Virtualization

Most corporate enterprise data warehouse ecosystems consist of multiple disparate data warehouses. The reasons include mergers and acquisitions, departments using specialized software, and databases chosen to support specific data needs. All these scenarios result in multiple data marts being built on multiple technologies. However, to provide a single source of truth, we end up building an Enterprise Data Warehouse (EDW) by sourcing data from the various data marts across the organization. One way of doing this is to run a project that establishes standard ETL methods to extract data from the regional data marts and stage it into the EDW. However, the various departmental/regional data warehouses already contain highly formatted, information-rich, aggregated data that is readily available. This implies that:

1. Extensive transformations might not be needed

2. The source data is rich in metadata

3. Smaller data volumes will be retrieved from the source systems

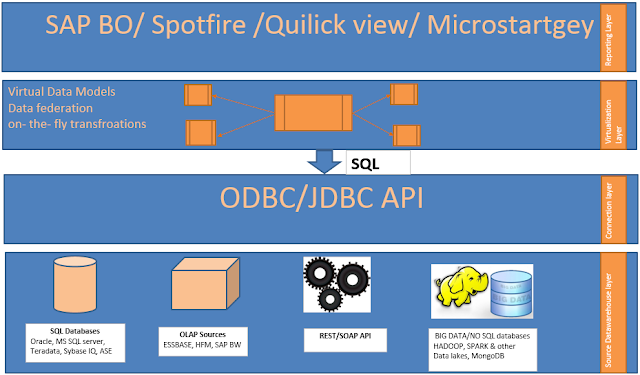

Following the traditional ETL/ELT approach, we end up replicating data in multiple places, and running an ETL project takes the usual project lead time. This is a good use case for data virtualization. With virtualization technologies, we access the source systems online without replicating data into the target system. Virtualization techniques on their own allow only limited transformations, and accessing data online might not be a good idea from a response time SLA perspective. But when virtualization is combined with massively parallel processing (MPP) and in-memory technologies, performing transformations on-the-fly becomes a realistic option. This allows us to create virtual data models based on source data and to perform transformations and apply business rules on-the-fly, without the need to persist or replicate data in multiple places.

As mentioned above, creating virtual data models promotes agile data mart scenarios, greatly reducing project timelines. By hiding most details about the source systems, the virtual data mart layer provides a holistic view across all source systems. Changes to a source system are also hidden from the global reporting systems. Even when a source system is swapped or replaced, global reports can still be used without any changes.

Hence, with virtualization we can achieve:

1. Smaller data footprint

2. Agile Data Modelling

3. Data Federation

4. Relief from Source system release management/complexities

However, the following issues will be critical:

1. Response time SLA

2. Ability to perform complex transformations

3. User Security/Access management

4. Master Data management

Virtualization with SAP HANA/ SAP BW 7.4

SDA between NON-SAP systems

SAP HANA supports virtualization through Smart Data Access (SDA). BW 7.4 promotes virtualization aggressively through LSA++ and a new breed of info providers: Composite provider and OpenODS view.

Currently, SAP HANA SDA supports Oracle, SQL Server, Teradata, Sybase IQ and Hadoop data sources. The corresponding ODBC drivers need to be installed on the HANA box. A background user with the required authorizations needs to be created in the source system and provisioned in HANA.

The required source database tables need to be added as virtual tables to a native HANA schema. These tables can then be accessed just like native HANA tables. All hard-coded filters, GROUP BY clauses and aggregations are pushed down to the source database. Dynamic filters, however, are not pushed down to the source database. Building statistics on virtual tables helps when joining virtual tables with native HANA tables.
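The setup described above can be sketched as a handful of SQL statements. The small Python helper below only builds the statement text; it does not connect to HANA. The statement shapes follow the HANA `CREATE REMOTE SOURCE`, `CREATE VIRTUAL TABLE` and `CREATE STATISTICS` statements as I understand them, and should be checked against the SQL reference for your revision. The adapter name `tdodbc`, the DSN, the `<NULL>` database placeholder and all object and user names are assumptions made for illustration.

```python
# Sketch only: builds SQL text for an SDA setup, does not talk to a database.
# All names (TD_MART, EDW, VT_ORDERS, ...) are hypothetical.

def remote_source_sql(name, adapter, dsn, user, password):
    """Register the source database as a remote source via an ODBC DSN."""
    return (
        f'CREATE REMOTE SOURCE "{name}" ADAPTER "{adapter}" '
        f"CONFIGURATION 'DSN={dsn}' "
        f"WITH CREDENTIAL TYPE 'PASSWORD' "
        f"USING 'user={user};password={password}'"
    )

def virtual_table_sql(schema, virtual_name, source, database, remote_schema, remote_table):
    """Add one remote table as a virtual table in a native HANA schema."""
    return (
        f'CREATE VIRTUAL TABLE "{schema}"."{virtual_name}" '
        f'AT "{source}"."{database}"."{remote_schema}"."{remote_table}"'
    )

def statistics_sql(schema, virtual_name, column):
    """Build statistics on a virtual table column to help join planning."""
    return (
        f'CREATE STATISTICS ON "{schema}"."{virtual_name}" ("{column}") '
        f"TYPE HISTOGRAM"
    )

def build_statements():
    return [
        # Background user created in the source system (assumed name).
        remote_source_sql("TD_MART", "tdodbc", "td_mart_dsn", "SDA_USER", "<password>"),
        # "<NULL>" stands in for a missing database level (an assumption here).
        virtual_table_sql("EDW", "VT_ORDERS", "TD_MART", "<NULL>", "SALES", "ORDERS"),
        statistics_sql("EDW", "VT_ORDERS", "REGION"),
        # A hard-coded filter plus GROUP BY: the kind of query the text says
        # is pushed down to the source database.
        'SELECT "REGION", SUM("AMOUNT") FROM "EDW"."VT_ORDERS" '
        'WHERE "FISCAL_YEAR" = 2014 GROUP BY "REGION"',
    ]

if __name__ == "__main__":
    for statement in build_statements():
        print(statement + ";")
```

In practice the statements would be run from a SQL console or a HANA client with a user that holds the required privileges; the password placeholder is deliberately left unfilled.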

When a virtual table is joined with native HANA tables, in the case of Sybase IQ, join relocation is performed if the native HANA table is small and executing the join entirely on the source database reduces network traffic. In the case of Hadoop, remote caching can be enabled to cache the output of the MapReduce job.
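The idea behind join relocation can be illustrated with a small simulation. This is not how the HANA optimizer is implemented; it is a sketch that uses two in-memory SQLite databases as stand-ins for HANA (local) and the remote source, and counts rows moved between them as a rough proxy for network traffic. The table names and data are made up.

```python
import sqlite3

def make_remote():
    """Stand-in for the remote source holding a large fact table."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
    con.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [(f"R{i % 50}", i) for i in range(1000)],
    )
    return con

def make_local():
    """Stand-in for HANA holding a small native table."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE region_filter (region TEXT)")
    con.executemany("INSERT INTO region_filter VALUES (?)", [("R1",), ("R2",)])
    return con

JOIN_SQL = (
    "SELECT s.region, SUM(s.amount) FROM {fact} s "
    "JOIN {small} f ON f.region = s.region "
    "GROUP BY s.region ORDER BY s.region"
)

def join_locally(local, remote):
    """Fetch the whole remote table, then join on the local side."""
    fetched = remote.execute("SELECT region, amount FROM sales").fetchall()
    local.execute("CREATE TEMP TABLE fetched_sales (region TEXT, amount INTEGER)")
    local.executemany("INSERT INTO fetched_sales VALUES (?, ?)", fetched)
    sql = JOIN_SQL.format(fact="fetched_sales", small="region_filter")
    result = local.execute(sql).fetchall()
    return result, len(fetched)  # rows moved: the full fact table

def join_relocated(local, remote):
    """Ship the small local table to the remote side and join there."""
    small = local.execute("SELECT region FROM region_filter").fetchall()
    remote.execute("CREATE TEMP TABLE shipped_filter (region TEXT)")
    remote.executemany("INSERT INTO shipped_filter VALUES (?)", small)
    sql = JOIN_SQL.format(fact="sales", small="shipped_filter")
    result = remote.execute(sql).fetchall()
    return result, len(small) + len(result)  # rows moved: small table + result

if __name__ == "__main__":
    local_result, local_moved = join_locally(make_local(), make_remote())
    reloc_result, reloc_moved = join_relocated(make_local(), make_remote())
    print("same result:", local_result == reloc_result)
    print("rows moved, local join:", local_moved)
    print("rows moved, relocated join:", reloc_moved)
```

Both strategies return the same aggregate, but the relocated join moves only the small table and the final result across the connection, which is why it pays off when the native table is small.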

Explain Plan and PlanViz help in identifying and optimizing remote operations. The output of a remote join is processed further on the HANA server. Smart Data Access administration helps us monitor connection status, the generated remote queries and their run times.

We can ask source system owners to build the required joins and business logic into database views. However, when the base tables are added as virtual tables, the whole logic can be built into HANA column views. This avoids depending on the source system for further changes.

OpenODS views

To expose virtual tables to BW, an OpenODS view can be created with the virtual tables as its source. To perform further transformations, HANA tables can be exposed as a data source. An info source with the structure required for the OpenODS view is created, followed by a transformation between the data source and the info source. An OpenODS view can then be created with this transformation as its source. Whenever the OpenODS view is accessed, the transformation is executed on the data from the data source. This is 100% virtual: the OpenODS view bypasses the PSA and directly executes the underlying database views. However, this might not meet response time SLAs, in which case we might have to persist data.

To perform business logic and complex transformations, we might have to persist data at multiple levels in BW. However, these steps can instead be modeled as a stack of calculation views in HANA. DSOs can be imported as HANA views, and further transformations can be performed in calculation views. These calculations are performed on-the-fly without staging data at intermediate levels. To integrate these calculation views into BW, they can be exposed as virtual providers or transient providers. An OpenODS view can also be built on them to expose them to the reporting layer.
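The "stack of views without intermediate staging" idea is not specific to HANA and can be sketched with plain SQL views. The example below uses an in-memory SQLite database as a stand-in; the three views play the role of stacked calculation views, and the table, view and column names are invented for illustration. It says nothing about HANA performance, only about the modeling pattern.

```python
import sqlite3

def build_model():
    """One persisted source table, three stacked views, no staging tables."""
    con = sqlite3.connect(":memory:")
    con.executescript(
        """
        CREATE TABLE source_orders (
            region TEXT, gross INTEGER, discount INTEGER, status TEXT
        );
        INSERT INTO source_orders VALUES
            ('EMEA', 100, 10, 'OK'),
            ('EMEA', 200,  0, 'CANCELLED'),
            ('APAC', 300, 30, 'OK'),
            ('EMEA',  50,  5, 'OK');

        -- Layer 1: business rule, drop cancelled orders.
        CREATE VIEW v_valid AS
            SELECT region, gross, discount
            FROM source_orders WHERE status = 'OK';

        -- Layer 2: derived measure.
        CREATE VIEW v_net AS
            SELECT region, gross - discount AS net FROM v_valid;

        -- Layer 3: reporting aggregate.
        CREATE VIEW v_report AS
            SELECT region, SUM(net) AS total_net FROM v_net GROUP BY region;
        """
    )
    return con

def report(con):
    """Query the top view; every layer is evaluated at query time."""
    return con.execute(
        "SELECT region, total_net FROM v_report ORDER BY region"
    ).fetchall()

def persisted_tables(con):
    """List the objects that actually store data."""
    rows = con.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table'"
    ).fetchall()
    return [name for (name,) in rows]

if __name__ == "__main__":
    con = build_model()
    print(report(con))
    # A new source row shows up in the report without any reload step.
    con.execute("INSERT INTO source_orders VALUES ('APAC', 100, 0, 'OK')")
    print(report(con))
    print(persisted_tables(con))
```

Only the source table stores data; changing a business rule means redefining a view rather than reloading an intermediate layer.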

DBCONNECT vs ODP HANA Information Views Context

Sometimes, however, we might have to replicate data from HANA views into the BW-managed schema. HANA views can be consumed via DBCONNECT or via the ODP HANA Information Views source system context. The ODP HANA Information Views context is optimized for accessing HANA views: large amounts of data can be staged into the BW schema with high performance. In addition, info providers built on these ODP data sources support direct access DTPs, bypassing the PSA. ODP data sources also support generic delta from HANA information views.

ODP BW Context for DATAMART scenario

Export data sources are currently used to support the data mart scenario between SAP BW systems. In that scenario, however, data has to be staged to the PSA in the target system. The ODP BW context exposes all info providers natively to the target system, so there is no need to generate export data sources. These data sources also support direct access DTPs to info providers, thereby avoiding staging data to the PSA. This context even supports delta, and the delta queues can be monitored with ODQMON in the source system. ODP queues store data in compressed format, and the PSA can be avoided in the target system.

SDA between BW systems

All info providers from the source BW can be imported as HANA views. These HANA views can be accessed from the target HANA system through SDA. This promotes 100% virtualization of data, but it limits the ability to perform complex transformations, and achieving response time SLAs is difficult. In these scenarios, data needs to be materialized, and the BW data mart scenario might help.
